About

Open-source quad-core camera effortlessly adds powerful machine vision to all your PC/Arduino/Raspberry Pi projects.

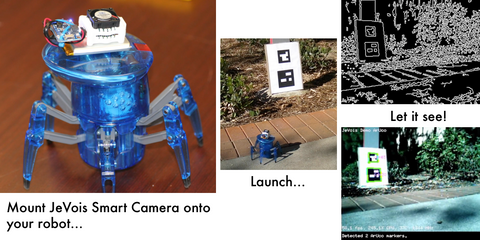

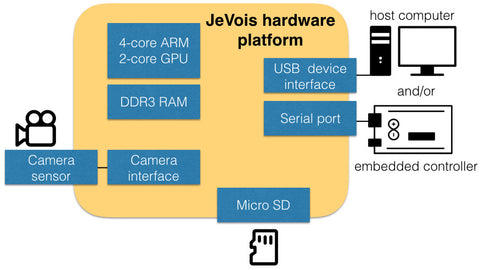

JeVois = video sensor + quad-core CPU + USB video + serial port, all in a tiny, self-contained package (28 cc or 1.7 cubic inches, 17 grams or 0.6 oz). Insert a microSD card loaded with the provided open-source machine vision algorithms (including OpenCV, TensorFlow Lite and Darknet deep neural networks, DLib, and many others), connect to your desktop, laptop, and/or Arduino, and give your projects the sense of sight immediately.

Learn computer vision with JeVois by programming your own machine vision modules live on JeVois using Python + OpenCV.

JeVois started as an educational project to encourage the study of machine vision, computational neuroscience, and machine learning as part of introductory programming and robotics courses at all levels (from K-12 to Ph.D.). At present, these courses often lack a machine vision component. We believe this is mainly because there is no simple machine vision device that can be used together with the Raspberry Pi, Arduino, or similar devices used in these courses. JeVois aims to fill this gap by providing a self-contained, configurable machine vision engine that can deliver both visual outputs showing how it is analyzing what it sees (useful for understanding the algorithms) and text outputs over a serial link that describe what it has found (useful for sending to a micro-controller that controls a robot).

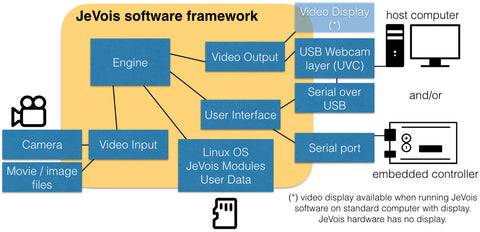

The JeVois framework operates as follows: video is captured from the camera sensor, processed on the fly by a machine vision algorithm running directly on the camera's own processor, and the results are streamed over USB to a host computer and/or over serial to a micro-controller.
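The capture, process, and stream steps described above can be sketched in plain Python. This is a hypothetical, simplified model, not the JeVois framework's actual API: frames are tiny grayscale grids, the "algorithm" just locates the brightest pixel, `usb_out` and `serial_out` are plain lists standing in for the USB video stream and the serial port, and the `TARGET x y` message format is an assumption made for illustration. Setting `usb_enabled=False` models a text-only configuration.

```python
from typing import Iterable, List, Tuple

Frame = List[List[int]]  # hypothetical: grayscale image as rows of 0-255 values


def find_brightest(frame: Frame) -> Tuple[int, int]:
    """Toy stand-in for a vision algorithm: (x, y) of the brightest pixel."""
    best_xy = (0, 0)
    best_val = -1
    for y, row in enumerate(frame):
        for x, val in enumerate(row):
            if val > best_val:  # strict '>' keeps the first maximum found
                best_val = val
                best_xy = (x, y)
    return best_xy


def run_pipeline(frames: Iterable[Frame], usb_enabled: bool = True) -> Tuple[List[Frame], List[str]]:
    usb_out: List[Frame] = []   # stands in for the USB video stream
    serial_out: List[str] = []  # stands in for the serial port
    for frame in frames:                           # 1. capture a frame from the sensor
        x, y = find_brightest(frame)               # 2. process it on the camera's CPU
        if usb_enabled:                            # 3a. demo display over USB
            annotated = [row[:] for row in frame]  # copy so the input is untouched
            annotated[y][x] = 0                    # toy "overlay": mark the detection
            usb_out.append(annotated)
        serial_out.append(f"TARGET {x} {y}")       # 3b. text result over serial (assumed format)
    return usb_out, serial_out


if __name__ == "__main__":
    frames = [[[10, 20], [30, 250]], [[200, 0], [0, 0]]]
    video, text = run_pipeline(frames)
    print(text)  # ['TARGET 1 1', 'TARGET 0 0']
```

Keeping the processing step separate from the two output channels is what lets the same algorithm serve a demo display, a text-only consumer, or both.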

To the host computer, the JeVois smart camera is just another USB camera. Different vision algorithms are selected by changing the USB camera resolution and frame rate. Users or machines can also interact with the JeVois smart camera, change its settings, or listen for text-based vision outputs over a serial link (both hardware serial and serial-over-USB are supported).
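Selecting an algorithm by resolution and frame rate amounts to a lookup keyed on the video mode the host requests. The sketch below is a hypothetical illustration only: the table entries and module names (`QRCodeDemo`, `ObjectTracker`, `EdgeDetector`) are invented, and JeVois's real configuration mechanism is not reproduced here.

```python
from typing import Dict, Tuple

# Hypothetical table: (width, height, fps) requested by the host -> algorithm name.
VIDEO_MAPPINGS: Dict[Tuple[int, int, float], str] = {
    (640, 480, 30.0): "QRCodeDemo",
    (320, 240, 60.0): "ObjectTracker",
    (176, 144, 120.0): "EdgeDetector",
}


def select_module(width: int, height: int, fps: float) -> str:
    """Return the algorithm bound to the requested USB video mode."""
    try:
        return VIDEO_MAPPINGS[(width, height, fps)]
    except KeyError:
        raise ValueError(f"no algorithm mapped to {width}x{height} @ {fps} fps") from None


if __name__ == "__main__":
    print(select_module(320, 240, 60.0))  # ObjectTracker
```

Because the video mode itself is the key, an unmodified webcam viewer on the host could switch algorithms simply by picking a different resolution from its menu; a fuller implementation would likely also match on pixel format.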

Three major modes of operation:

- Demo/development mode: the smart camera outputs a demo display over USB that shows the results of its analysis, possibly along with simple results communicated over the serial port (e.g., the coordinates and content of any QR code that has been identified).

- Text-only mode: the smart camera provides no USB video output, only text strings, for example, commands for a pan/tilt controller, a robot, or a home automation system.

- Pre-processing mode: the smart camera outputs video that is intended for machine consumption, for example, an edge map computed over the video frames captured by the camera sensor, or a set of image crops around the three most interesting objects in the scene. This video can then be further processed by the host computer, for example, using a massive deep neural network running on a cluster of high-power GPUs to recognize the three most interesting objects that the smart camera has detected. Text outputs over serial are, of course, also possible in this mode.
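As an illustration of the pre-processing mode, the sketch below computes a simple binary edge map, the kind of machine-oriented video a host could analyze further. It uses a plain gradient-magnitude filter (absolute horizontal plus vertical central differences) in pure Python to stay dependency-free; on the camera one would more likely call an OpenCV routine such as `cv2.Canny` or `cv2.Sobel`, and nothing here reflects how JeVois's own modules compute edges. The `threshold` default is an arbitrary assumption.

```python
from typing import List

Frame = List[List[int]]  # grayscale image as rows of 0-255 values


def edge_map(frame: Frame, threshold: int = 50) -> Frame:
    """Return a binary edge map (255 = edge, 0 = background).

    Uses the L1 gradient magnitude |dx| + |dy| with central differences;
    border pixels stay 0 because they lack a full neighborhood.
    """
    h = len(frame)
    w = len(frame[0]) if h else 0
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            dx = frame[y][x + 1] - frame[y][x - 1]  # horizontal intensity change
            dy = frame[y + 1][x] - frame[y - 1][x]  # vertical intensity change
            if abs(dx) + abs(dy) >= threshold:
                out[y][x] = 255
    return out


if __name__ == "__main__":
    img = [[0, 0, 200, 200] for _ in range(4)]  # dark left half, bright right half
    for row in edge_map(img):
        print(row)
```

Streaming such a map instead of raw frames lets the host skip the low-level filtering and spend its compute on higher-level recognition.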

But, really, anything is possible, since the whole JeVois software framework is open-source.

Long-term vision

With JeVois we aim to enable everyone to use machine vision in embedded, IoT, robotics, and educational projects. We continue to work on the following open-source software aspects:

- Software development is gearing up to provide a repository of machine vision modules shared by the community. This is the equivalent of an app store for JeVois.

- We are developing a curriculum of activities around the JeVois camera for all levels (from kindergarten to Ph.D.). Activities will range from the entry level (just point the camera at something and see whether it can identify it), to learning how the underlying machine vision algorithms work, to modifying existing algorithms, and finally to learning vision theory and developing your own algorithms. We will leverage significant material we have developed over the past 15 years teaching robotics, vision, and artificial intelligence courses at the undergraduate and graduate levels.

- Our overall mission is an educational and outreach one. We hope that JeVois will help us and others translate the latest vision research results into working machines. JeVois will be integrated into our outreach activities, which include working with neighboring K-12 schools, our Robotics Open House program, and others.

Please see http://jevois.org for more details and updates.

Contributions and novelty

In principle, you could assemble a working machine vision system by taking an existing embedded computer board, adding a camera, and programming a USB output. In fact, this is how we started... er, two years ago!

The hurdles we encountered and solved in creating JeVois are as follows:

- Linux support for camera chips is often very limited. Most camera drivers in the Linux kernel support only one or a few resolutions and frame rates, and many do not support controls such as manual exposure. We wrote our own highly efficient kernel driver and figured out how to configure the camera chip registers to support the controls available on the camera sensor chip (exposure, frame rate, etc.). You could use a USB camera, for which support for the controls has been standardized, but few USB cameras support 60 or 120 frames/s, and they would increase the overall size, weight, power, and cost of the system. Our kernel camera driver exploits hardware integrated into our CPU chip for direct image capture from a camera sensor. This is faster, provides lower latency (the time between image capture and the start of processing), and uses fewer CPU resources than attaching a USB camera sensor.

- Linux support for the device side of video streaming over USB is virtually non-existent (in open source). This is the software that must run on the smart camera's CPU to make it appear to a connected host computer as a USB camera. A webcam gadget module has been present in the Linux kernel source tree for several years, but its functionality is very limited and, as we discovered, its very core logic is broken. We developed a fully working device-side kernel USB Video Class driver, with highly efficient data streaming and pass-through support for video resolutions, pixel formats, frame rates, and camera controls (when users change these on the host computer, the changes are relayed to the camera sensor chip).

- Many small embedded computer boards exist, but software support is often limited, and quality is sometimes low. We had to fix kernel USB drivers, kernel GPU drivers, kernel camera drivers, and many other elements of the Linux kernel to make everything work flawlessly while supporting all the features listed above. Small embedded boards are also no match for our solution in terms of total system size (including camera, connectors, and fan), power consumption, speed, video latency (the delay between capturing an image and presenting the processing results to the host computer), and out-of-the-box enjoyment and reliability. For example, a quad-core chip will simply overheat under load unless it has a fan or a very, very large heatsink.
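The frame-rate, latency, and USB-streaming concerns above can be made concrete with back-of-the-envelope arithmetic. The sketch below assumes uncompressed YUYV video (2 bytes per pixel) and a USB 2.0 high-speed link using a high-bandwidth isochronous endpoint, whose ceiling is 3 × 1024 bytes per 125 µs microframe, i.e. 24,576,000 bytes/s. These are illustrative assumptions; the text above does not state which pixel format or USB transfer type JeVois uses. The frame period is shown as a rough per-frame processing budget if frames are handled one at a time.

```python
# Assumptions (not stated in the text above): uncompressed YUYV at
# 2 bytes/pixel, streamed over a USB 2.0 high-bandwidth isochronous endpoint.
BYTES_PER_PIXEL_YUYV = 2
MICROFRAMES_PER_SECOND = 8000          # one USB 2.0 microframe every 125 us
MAX_ISO_BYTES_PER_MICROFRAME = 3 * 1024
USB2_ISO_MAX_BYTES_PER_S = MICROFRAMES_PER_SECOND * MAX_ISO_BYTES_PER_MICROFRAME


def frame_period_ms(fps: float) -> float:
    """Time between consecutive frames at the given frame rate."""
    return 1000.0 / fps


def stream_bytes_per_s(width: int, height: int, fps: float) -> float:
    """Raw bandwidth needed to stream uncompressed YUYV video."""
    return width * height * BYTES_PER_PIXEL_YUYV * fps


def fits_usb2_iso(width: int, height: int, fps: float) -> bool:
    """True if the uncompressed stream fits under the isochronous ceiling."""
    return stream_bytes_per_s(width, height, fps) <= USB2_ISO_MAX_BYTES_PER_S


if __name__ == "__main__":
    for w, h, fps in [(640, 480, 30), (640, 480, 60), (320, 240, 120)]:
        print(f"{w}x{h}@{fps}: period {frame_period_ms(fps):.2f} ms, "
              f"{stream_bytes_per_s(w, h, fps) / 1e6:.1f} MB/s, "
              f"fits USB 2.0 iso: {fits_usb2_iso(w, h, fps)}")
```

Under these assumptions, 640×480 at 30 fps needs about 18.4 MB/s and fits, while 640×480 at 60 fps needs about 36.9 MB/s and would not fit uncompressed; 320×240 at 120 fps is back to about 18.4 MB/s with an 8.33 ms frame period.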

In summary, because we are developing the hardware, software, and mechanical design jointly, we are able to deliver a highly optimized, plug-and-play, high-satisfaction solution that just does not exist anywhere else today.